The sitemap.xml and robots.txt files are machine-readable files stored in the root folder of a website. They tell search engines which URLs of the website should be indexed and which should not, which makes them important for search engine optimisation. With the built-in SEO configuration type, you can generate both files in the root directory of a website as automatically as possible.

Create a sitemap.xml file in the following way:

- open the Explorer and navigate to the root folder of your website

- click on the wand icon which opens the Create new resource dialog

- choose "Configurations" from the select box and from the list that appears, choose the SEO configuration type

- save the SEO configuration file as "

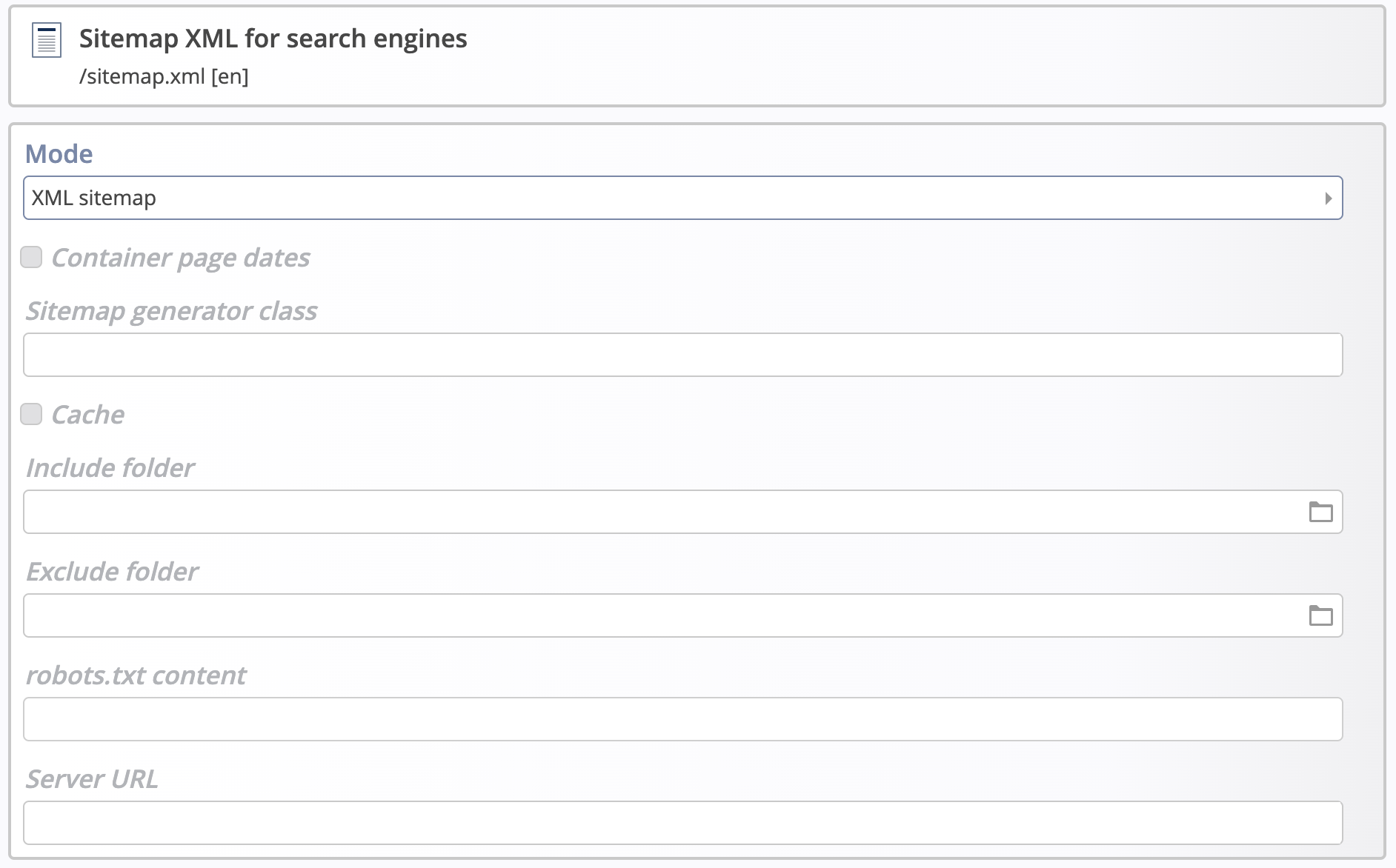

sitemap.xml" - open the newly created file with the content editor and make sure that the "XML sitemap" mode is selected

By default, a sitemap.xml contains all navigation entries of the website as well as the detail page URLs of all contents stored in the website.
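For orientation, the following Java sketch writes a minimal sitemap.xml in the sitemaps.org protocol format that search engines read. The format consists of a `<urlset>` element with one `<url>` per page. Each `<url>` holds a `<loc>` element and, optionally, a `<lastmod>` date, which is the kind of date the Container page dates option described below adds. The class `SitemapSketch`, its `Entry` record and the example URLs are hypothetical. This is not the OpenCms sitemap generator, and the exact markup OpenCms emits may differ.

```java
import java.util.List;

// Hypothetical sketch of the sitemaps.org <urlset> format; not OpenCms code.
class SitemapSketch {

    // One sitemap entry: an absolute URL and an optional last-modification
    // date (null when no date should be written).
    record Entry(String loc, String lastmod) {}

    // Escapes the characters that must not appear literally in XML text.
    // "&" is replaced first so that the other replacements are not double-escaped.
    static String escape(String s) {
        return s.replace("&", "&amp;")
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;")
                .replace("'", "&apos;");
    }

    // Serialises the entries as a sitemap document.
    static String toSitemap(List<Entry> entries) {
        StringBuilder sb = new StringBuilder();
        sb.append("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n");
        sb.append("<urlset xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">\n");
        for (Entry e : entries) {
            sb.append("  <url>\n");
            sb.append("    <loc>").append(escape(e.loc())).append("</loc>\n");
            if (e.lastmod() != null) {
                // W3C date format, e.g. 2024-01-31
                sb.append("    <lastmod>").append(escape(e.lastmod())).append("</lastmod>\n");
            }
            sb.append("  </url>\n");
        }
        sb.append("</urlset>\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(toSitemap(List.of(
                new Entry("https://www.example.com/", "2024-01-31"),        // navigation entry
                new Entry("https://www.example.com/news/article-1/", null)  // detail page URL
        )));
    }
}
```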

You can modify this default behaviour with the following options:

Container page dates. If checked, the last modification date of a container page, or of a content shown on a detail page, is included in the sitemap.xml file. Some search engines use this date to optimise the crawling of a site.

Sitemap generator class. Advanced option if your installation provides a custom Java class for sitemap generation.

Cache. If checked, a cached version of the sitemap.xml file is delivered. This is especially useful for large websites, where generating the XML sitemap can be resource-intensive and take a long time. The option only works in combination with the scheduled job org.opencms.site.xmlsitemap.CmsUpdateXmlSitemapCacheJob, which refreshes the cached file at regular intervals.

Include folder. If a folder is selected, all container pages and detail contents in that folder, including those in its subfolders, are included in the XML sitemap. Please note that all other folders on the same level or above no longer appear in the sitemap.xml.

Exclude folder. Excludes the container pages and detail contents of the selected folder, including those in its subfolders. This is typically used to exclude certain subfolders of the included folder.

robots.txt content. Do not use this field for a sitemap.xml configuration file.
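The interaction between the Include folder and Exclude folder options can be pictured as a simple path-prefix filter. The Java sketch below is a hypothetical illustration, not the OpenCms implementation. `FolderFilterSketch`, `filter` and the site-relative paths are invented for this example. Folders are assumed to end with a slash so that `/en/` does not also match a folder such as `/english/`.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical illustration of the Include folder / Exclude folder options;
// not OpenCms code. Folder paths are assumed to end with "/".
class FolderFilterSketch {

    // True if the resource path lies in the folder or one of its subfolders.
    static boolean isInside(String path, String folder) {
        return path.startsWith(folder);
    }

    // Keeps only the paths below includeFolder (if one is set)
    // that are not below any of the exclude folders.
    static List<String> filter(List<String> paths, String includeFolder, List<String> excludeFolders) {
        return paths.stream()
                // With an include folder, everything outside it is dropped,
                // including sibling folders and the levels above it.
                .filter(p -> includeFolder == null || isInside(p, includeFolder))
                // Each exclude folder removes its whole subtree.
                .filter(p -> excludeFolders.stream().noneMatch(ex -> isInside(p, ex)))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> pages = List.of(
                "/index.html",
                "/en/index.html",
                "/en/news/article-1.html",
                "/en/internal/index.html",
                "/de/index.html");
        // Include /en/, but leave out its /en/internal/ subfolder.
        filter(pages, "/en/", List.of("/en/internal/")).forEach(System.out::println);
    }
}
```

In this example only `/en/index.html` and `/en/news/article-1.html` remain. `/index.html` and `/de/index.html` lie outside the included folder, and `/en/internal/` is excluded explicitly.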

Please note:

- if a sitemap.xml file is stored in a directory other than the root folder of the website, e.g. in a subsite folder (which the system does not prohibit), it has no effect, since search engines only look for the file in the root directory

- if you open a sitemap.xml file in the browser, the URLs of the online project are always displayed, even if you are in the offline project

Create a robots.txt file in the following way:

- open the Explorer and navigate to the root folder of your website

- click the wand icon, which opens the "Create new resource" dialog

- choose "Configurations" from the select box and, from the list that appears, choose the SEO configuration type

- save the SEO configuration file as "robots.txt"

- open the newly created file with the content editor and choose the "robots.txt" mode

- use the robots.txt content field to define your exclusion rules; all other fields are irrelevant in robots.txt mode
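A deliberately simplified Java sketch can illustrate what the exclusion rules in the robots.txt content field mean for a crawler. It assumes the rules are written as `User-agent` and `Disallow` lines. It handles only groups for `User-agent: *` and plain path prefixes. It ignores `Allow`, wildcards and the longest-match rule of the Robots Exclusion Protocol (RFC 9309). `RobotsSketch`, its methods and the example paths are hypothetical and not part of OpenCms.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Simplified, hypothetical check of robots.txt exclusion rules; not OpenCms code.
// Only "User-agent: *" groups and plain "Disallow:" path prefixes are handled.
class RobotsSketch {

    // Collects the Disallow prefixes that apply to all user agents.
    static List<String> disallowedPrefixes(String robotsTxt) {
        List<String> prefixes = new ArrayList<>();
        boolean inStarGroup = false;
        boolean lastWasAgent = false;
        for (String raw : robotsTxt.split("\\R")) {
            String line = raw.replaceFirst("#.*", "").trim(); // strip comments
            int colon = line.indexOf(':');
            if (colon < 0) continue;
            String key = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim();
            if (key.equals("user-agent")) {
                // Consecutive User-agent lines belong to the same group.
                boolean star = value.equals("*");
                inStarGroup = lastWasAgent ? (inStarGroup || star) : star;
                lastWasAgent = true;
            } else {
                lastWasAgent = false;
                // An empty Disallow value means "nothing is disallowed".
                if (key.equals("disallow") && inStarGroup && !value.isEmpty()) {
                    prefixes.add(value);
                }
            }
        }
        return prefixes;
    }

    // A path is allowed if it does not start with any disallowed prefix.
    static boolean isAllowed(String robotsTxt, String path) {
        return disallowedPrefixes(robotsTxt).stream().noneMatch(path::startsWith);
    }

    public static void main(String[] args) {
        // Example content as it might be entered in the robots.txt content field.
        String robots = "User-agent: *\n"
                + "Disallow: /en/internal/\n";
        System.out.println(isAllowed(robots, "/en/news/article-1.html")); // true
        System.out.println(isAllowed(robots, "/en/internal/index.html")); // false
    }
}
```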