SEO configuration

With an SEO configuration file you can automatically generate the robots.txt and sitemap.xml files in the root directory of a website. Those files are important for search engine optimisation.

sitemap.xml and robots.txt are machine-readable files stored in the root folder of a website. They tell search engines which URLs of the website should be indexed and which should not. The built-in SEO configuration type helps to create both files as automatically as possible.

Create a sitemap.xml file in the following way:

- open the Explorer and navigate to the root folder of your website

- click on the wand icon which opens the Create new resource dialog

- choose "Configurations" from the select box and from the list that appears, choose the SEO configuration type

- save the SEO configuration file as "

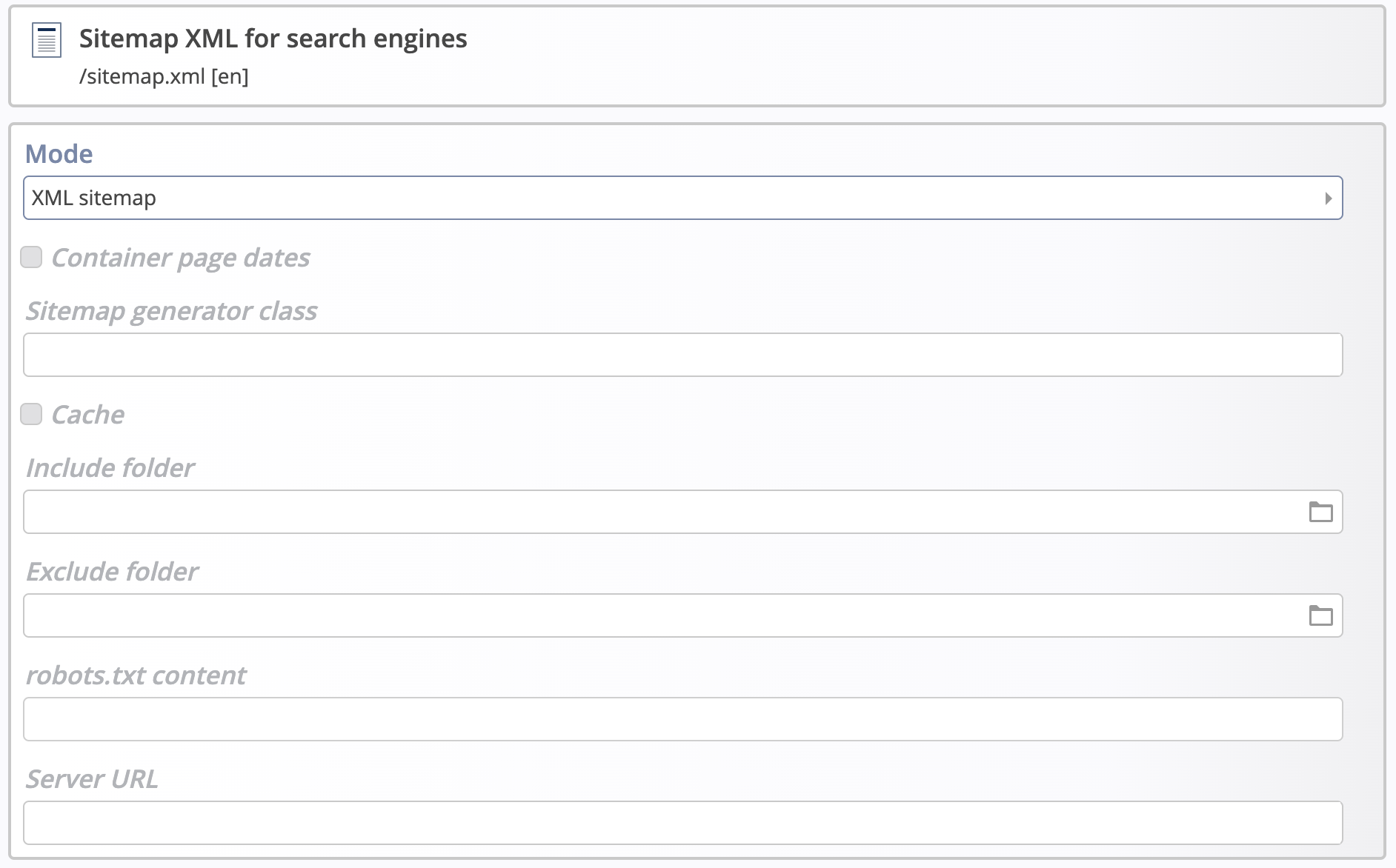

sitemap.xml" - open the newly created file with the content editor and make sure that the "XML sitemap" mode is selected

By default, a sitemap.xml contains all navigation entries of a website as well as the detail page URLs of all contents stored in the website.

This default behaviour can be modified with the following options:

Container page dates. If checked, the last modification date of a container page or a content shown on a detail page is included in the sitemap.xml file. There are search engines that use this date to optimize the crawling of a site.

Sitemap generator class. Advanced option if your installation provides a custom Java class for sitemap generation.

Cache. If checked, a cached version of the sitemap.xml file is delivered. This is especially useful for large websites, where generating the XML sitemap can be a resource-intensive task that takes a long time. The option only works in combination with the scheduled job org.opencms.site.xmlsitemap.CmsUpdateXmlSitemapCacheJob, which refreshes the cached file on a regular basis.

Include folder. If a folder is selected, all its container pages and detail contents, including resources from subfolders, are included in the XML sitemap. Please note that all other folders on the same level or above then no longer appear in the sitemap.xml.

Exclude folder. Allows you to exclude the container pages and detail contents of a folder, including resources from subfolders. This is typically used to exclude certain subfolders of a previously included folder.

robots.txt content. Do not use this field for a sitemap.xml configuration file.

- if a sitemap.xml file is stored in another directory than the root folder of a website, e.g. in a subsite folder (which is not prohibited by the system), this has no effect since search engines only ever search for the file in the root directory

- if you open a sitemap.xml file in the browser, the URLs of the online project are always displayed, even if you are in the offline project
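To check what a generated sitemap.xml actually contains, the file can be read with a few lines of Python. The following is a minimal sketch, not part of OpenCms: the sample document, the example.com URLs and the helper name `list_entries` are assumptions made for illustration. Only the namespace and the element names `url`, `loc` and `lastmod` come from the sitemaps.org protocol. An entry carries a `lastmod` value only if the sitemap was generated with modification dates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sample; a real file would be downloaded from the website root.
SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/my/folder/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/news/</loc>
  </url>
</urlset>
"""


def list_entries(xml_text):
    """Return (loc, lastmod) pairs; lastmod is None if the element is absent."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        entries.append((loc, lastmod))
    return entries


if __name__ == "__main__":
    for loc, lastmod in list_entries(SAMPLE):
        print(loc, lastmod or "(no lastmod)")
```

A check like this is useful after changing the include or exclude folders, since it shows directly which URLs remain in the file.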

Create a robots.txt file in the following way:

- open the Explorer and navigate to the root folder of your website

- click on the wand icon which opens the Create new resource dialog

- choose "Configurations" from the select box and from the list that appears, choose the SEO configuration type

- save the SEO configuration file as "robots.txt"

- open the newly created file with the content editor and choose the "robots.txt" mode

- use the robots.txt content field to define your exclusion rules; all other fields have no relevance for the robots.txt mode
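Exclusion rules can be tested before they go live. The sketch below uses Python's standard `urllib.robotparser` module to evaluate a set of rules against sample paths. The rules themselves, the folder names `/internal/` and `/search/`, and the helper `is_allowed` are hypothetical and only illustrate the kind of text that could be entered in the robots.txt content field.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical exclusion rules, as they might be entered in the
# "robots.txt content" field of the SEO configuration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal/
Disallow: /search/
"""


def is_allowed(path, user_agent="*"):
    """Return True if the rules above permit crawling of the given path."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for path in ("/my/folder/", "/internal/report.html", "/search/"):
        print(path, "allowed" if is_allowed(path) else "disallowed")
```

Note that `urllib.robotparser` matches rules by simple path prefix, so individual search engines may interpret more complex patterns differently.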

In a standard scenario, the same page can be accessed using multiple URLs:

- /my/folder/index.html

- /my/folder/

- /my/folder

Your sitemap.xml holds only one of these URL variants per page, but editors may choose any variant when they set links. As a result, website visitors are likely to access a page via all three variants, and search engine crawlers may find different variants by following cross links. This complicates the evaluation of tracking data, since the same page is tracked under three different URLs. It can also cause ranking issues due to duplicate content in search engines like Google.

To prevent the usage of different URL variants, configure the link finisher in your sitemap configuration. Configuration is done via three sitemap attributes:

- template.link.finisher: set the value to foldername to bring links to the form "/my/folder"; other options are currently not supported

- template.link.defaultfiles (optional): contains a comma-separated list of default file names to cut off; defaults to the file names configured under opencms/vfs/defaultfiles in the opencms-vfs.xml configuration file

- template.link.finisher.exclude (optional): a regular expression to prevent certain paths from going through the link finisher if they match

The link finisher is applied to all links in WYSIWYG editor fields (more precisely to all links in fields of type OpenCmsHTML) and links wrapped via <cms:link> or accessed through the CmsJspLinkWrapper in JSP code.

The link finisher is a post processor for links. That means the original links will remain in the content and only be rewritten when rendered. Since the configuration is done in the sitemap, it holds only for pages in that sitemap, i.e., link finishing is done relative to the context of the current page.

For performance reasons, the link finisher does not take changed default file names set via the property "default-file" into account, but only the system defaults. Reconfiguration of default files must be done via the template.link.defaultfiles sitemap attribute.
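The effect of the foldername setting can be illustrated with a short Python sketch. This is not the OpenCms implementation, only a model of the behaviour described above: the function name `finish_link`, the default file list, and the choice to apply the exclude expression with `re.search` are assumptions. Query strings and anchors are ignored for brevity.

```python
import re

# Assumed default file names for illustration; the real values come from
# opencms-vfs.xml or the template.link.defaultfiles sitemap attribute.
DEFAULT_FILES = ("index.html", "index.htm")


def finish_link(path, default_files=DEFAULT_FILES, exclude=None):
    """Bring a site path to the "/my/folder" form (illustration only)."""
    # Paths matching the exclude expression are returned unchanged.
    if exclude and re.search(exclude, path):
        return path
    # Cut off a trailing default file name such as ".../index.html".
    for name in default_files:
        if path.endswith("/" + name):
            path = path[: -len(name)]
            break
    # Remove trailing slashes, but keep the root path "/".
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/") or "/"
    return path


if __name__ == "__main__":
    for link in ("/my/folder/index.html", "/my/folder/", "/my/folder"):
        print(link, "->", finish_link(link))
```

All three URL variants from the list above collapse to the same result, which is the property that keeps tracking data and search engine indexes consistent.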

The link finisher is available since OpenCms 20.